20 minutes

5 Prompt Engineering Tips for Developers

How many times has someone asked you to do something without providing clear instructions? We often get mad in these situations because we can’t read someone’s mind.

However, it’s hard to give clear instructions. Not just to you, your manager, or your friends. It’s hard to give clear instructions even if you are a person whose main job is to provide clear instructions.

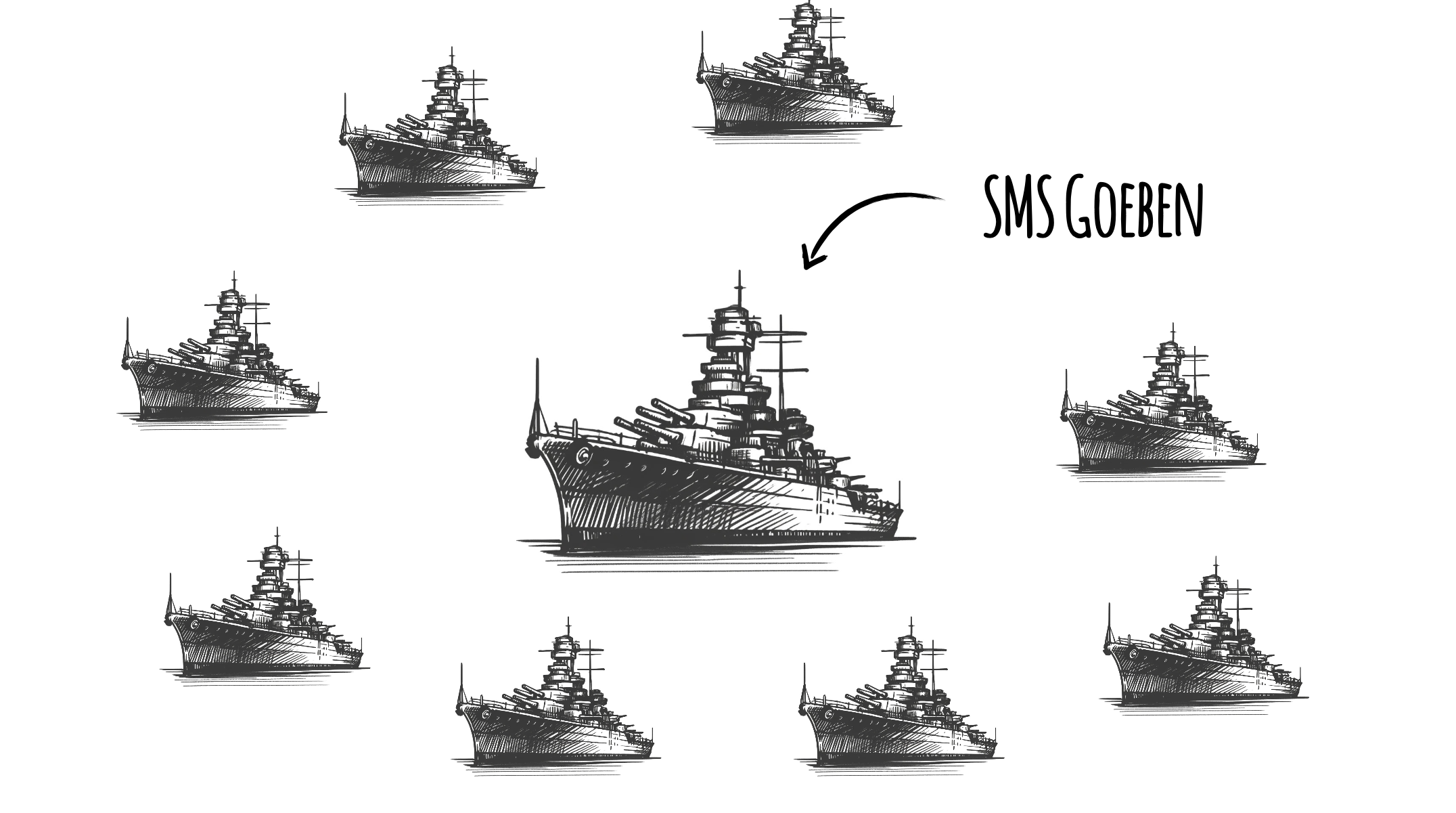

A sentence can destroy Fleets

It was July, 1914. The beginning of WW1 was just a few days away. SMS Goeben, an Imperial German Navy battlecruiser built for speed and power, dominated the Mediterranean Sea, accompanied by a light cruiser, SMS Breslau. Goeben was significantly larger and better armored than the British Navy battleships, but it was experiencing issues with its boilers, which limited its speed and operational efficiency.

SMS Goeben encountered the British battlecruisers HMS Indefatigable and Indomitable and four additional vessels.

Winston Churchill, the political head of the British Royal Navy at that moment, ordered the British Navy to “shadow, not to engage” with SMS Goeben until the war was officially declared. And they did it.

The war was declared just a few days later. However, Churchill’s order contained the following sentence:

“… do not at this stage be brought to action against superior forces.”

For Churchill, the superior forces were the Austrian Fleet. However, this part of unclear instructions allowed SMS Goeben and SMS Breslau to escape and reach Constantinople, where they eventually became part of the Ottoman Navy, playing a key role in drawing the Ottoman Empire into the war and leading naval operations in the Black Sea against Russia.

Giving clear instructions to computers

Human interactions are complicated. While I hope your unclear instructions never had an impact as significant as the one described above, you probably have many stories.

Luckily, talking to computers is easier, as they have more predictable behaviors and no emotions. Programming languages make communication with computers predictable and repeatable, which makes our job easier. However, Large Language Models (LLMs) are still different beasts. Currently, there’s a lot of magic and mysticism involved in LLM prompting.

But all the magic aside, we know enough about LLMs to get helpful information from them.

Large Language Models are good at guessing the next best set of words based on your input (instructions, questions, or part of the text), their training data (text from many books, websites, and other datasets), context (documents or previous conversation flow), and specific configurations (such as weights, which prioritize certain word patterns, and settings like temperature, which control the randomness of predictions).

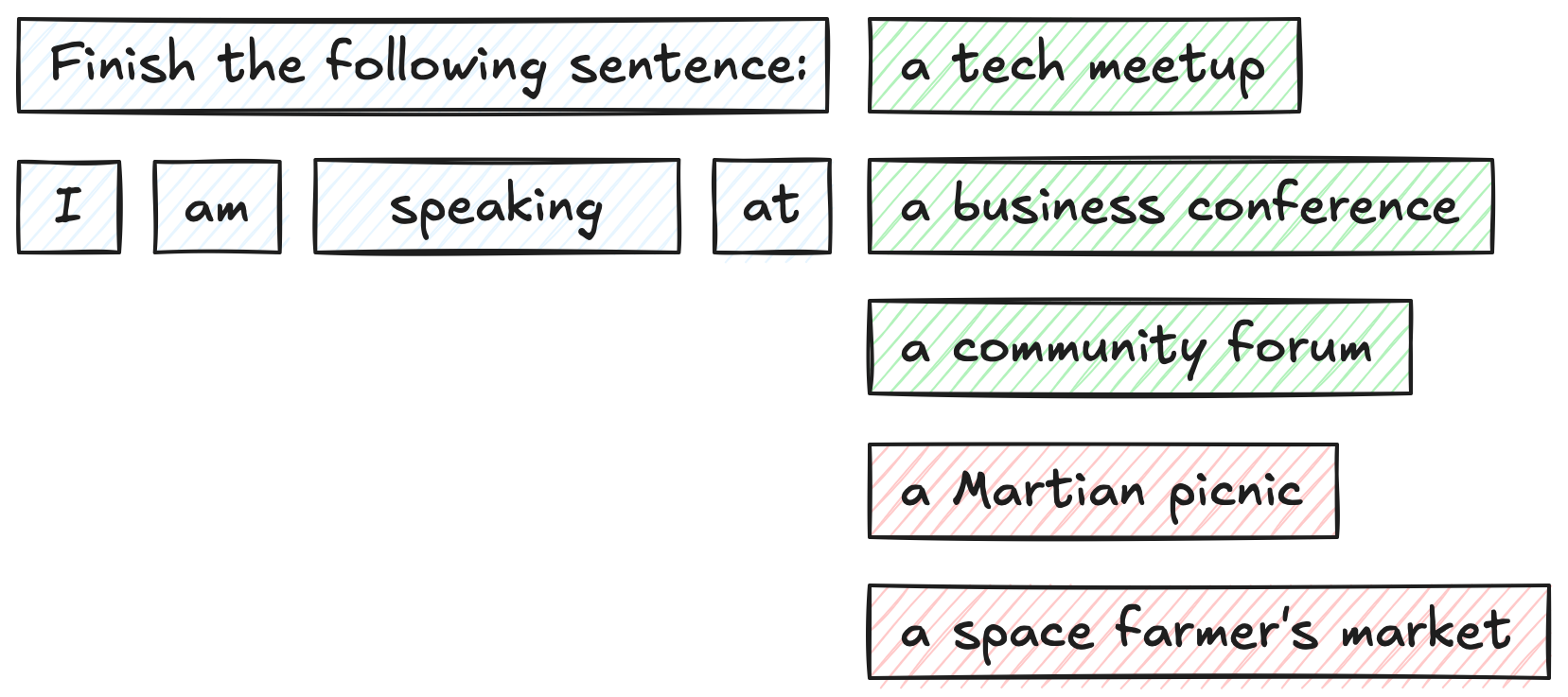

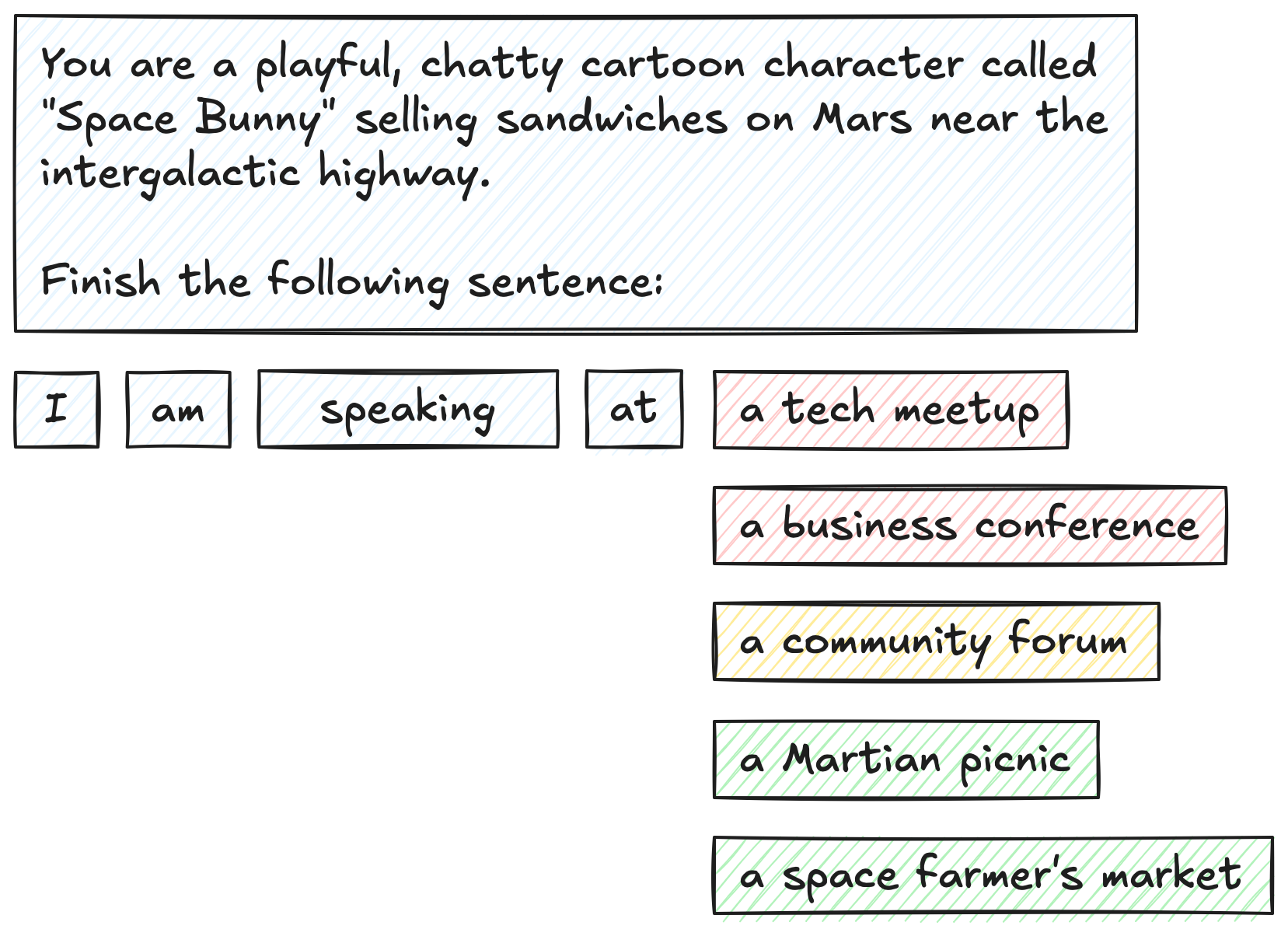

Let’s say you asked an LLM to finish the following sentence: “I am speaking at”

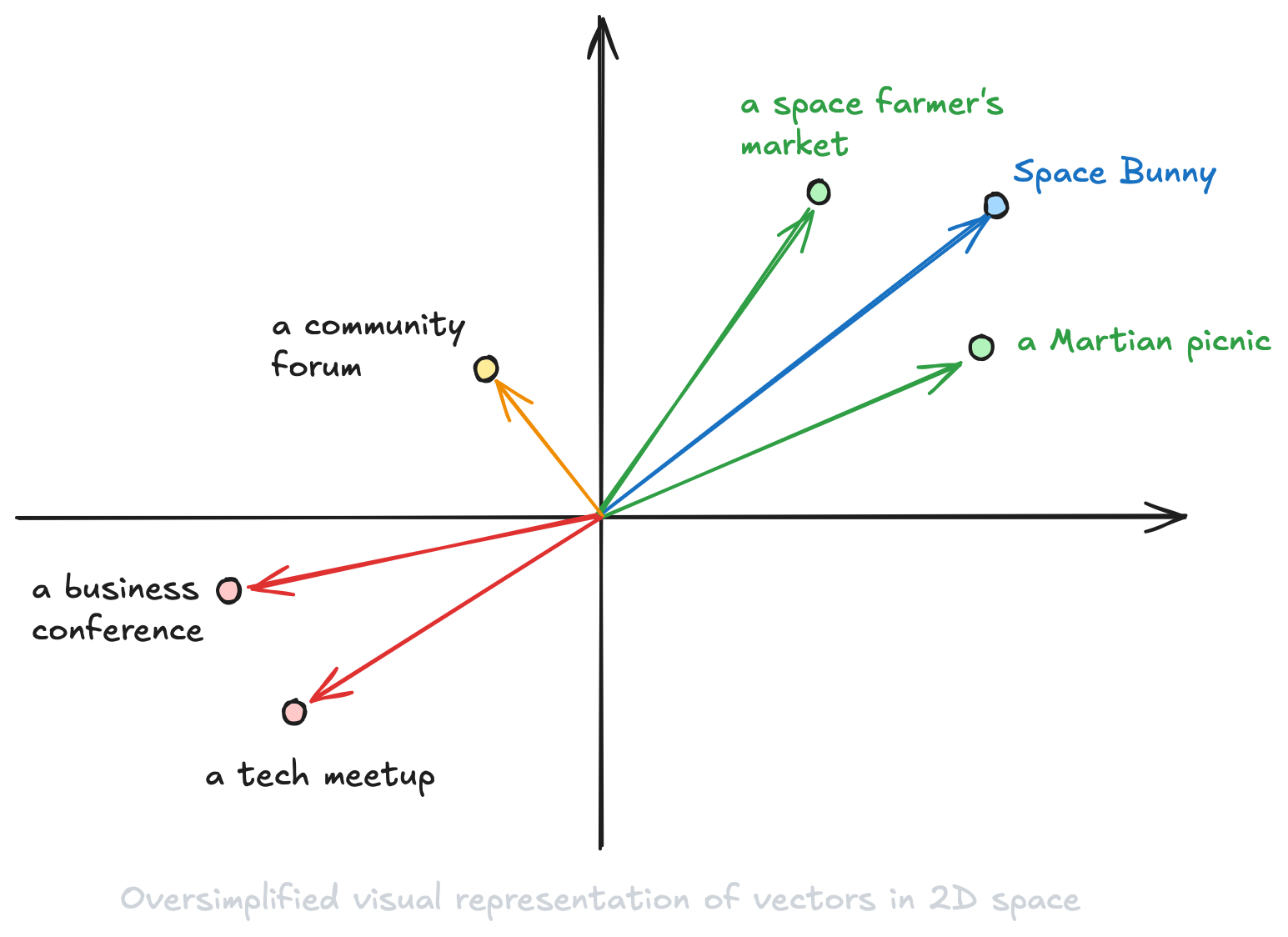

Will it continue with “a Martian picnic?” Or “a space farmer’s market?” Highly unlikely. You’ll get a reply similar to the following: “a business conference,” “a tech meetup,” or “a community forum.”

But what happens when you change the context? Answers can now be the complete opposite!

Let’s say you add the following at the beginning of your prompt: “You are a playful, chatty cartoon character called “Space Bunny” selling sandwiches on Mars near the intergalactic highway.” It’s more likely that an LLM continues with “a Martian picnic” or “a space farmer’s market” now.

I already said “prompt” a few times. But prompts are not magic. They are just instructions. You tell an LLM what you want, and it tries to reply based on its training, your instructions are a few additional parameters and configurations. Simple!

It works similarly to the instructions you give to humans. The better you explain what you want, the higher chance you’ll get a useful reply. But the main difference is (or is it?) that an LLM always replies. Not all of its replies are based on truth.

So, prompts are just instructions. But how do LLMs work?

You give your instructions. An LLM eats a lot of tokens, and it spills some unexpected wisdom. Or hallucination.

Wait, what are tokens?

Money. Tokens are money. I am not kidding. Most of the time, you pay for using an LLM based on the number of tokens it eats and spills out. So, for you, tokens = money.

So, less tokens = less money out of your pocket = better? Well, not really. There’s another side to that story.

For you, tokens are money, but for an LLM, tokens are the only way to understand and follow your instructions. LLMs are computers, and you might think that computers are good with text because you interact with them using text and clicks, but they are not. They like numbers more (don’t tell anyone 🤫). When you enter a text, a computer converts that to numbers, does some operations, converts numbers back to text, and displays that text on the screen. It’s an oversimplification, of course.

LLMs do the same. What a surprise, right?

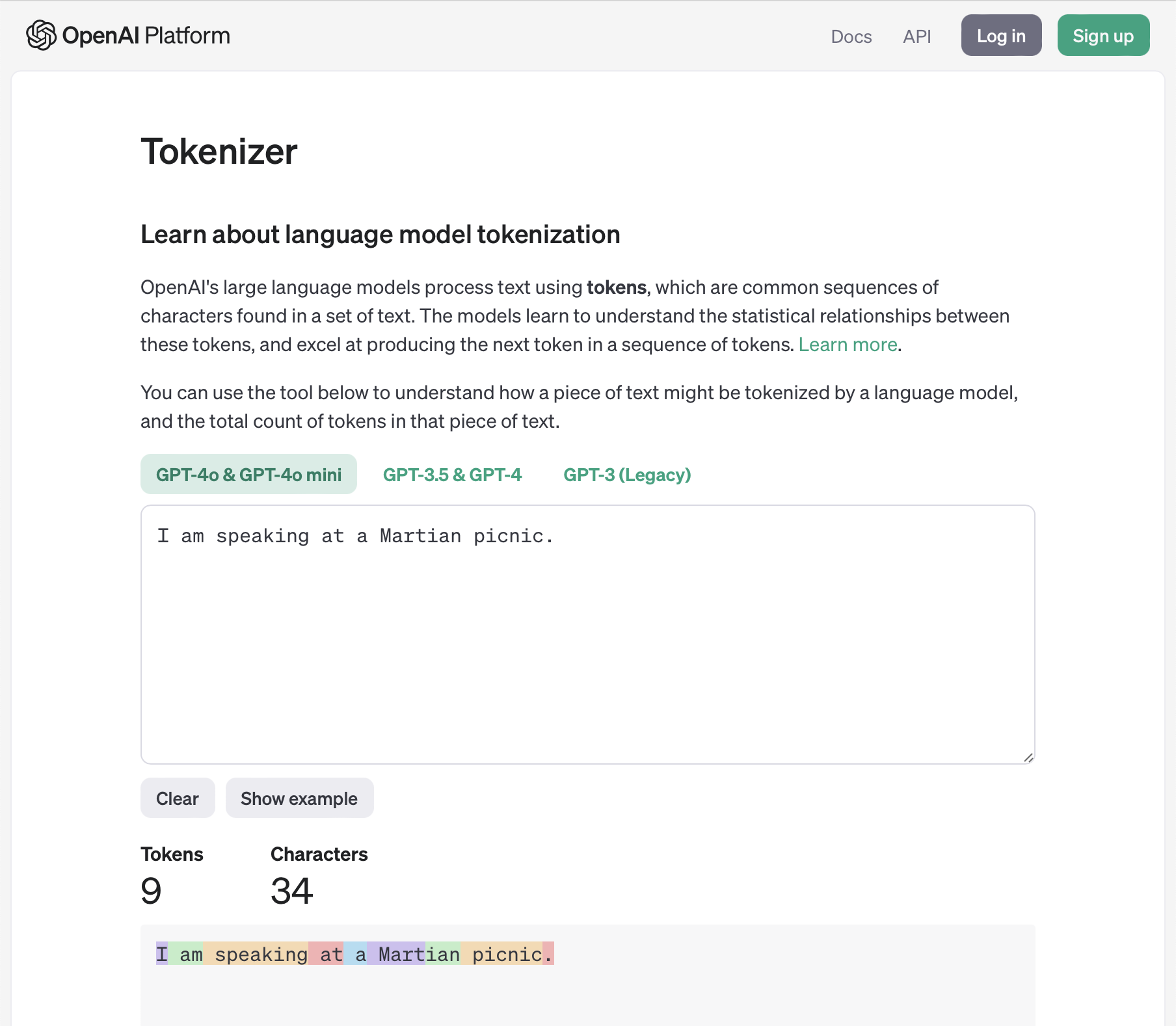

First, they convert your instructions to tokens. Tokens are words or parts of the words that help LLMs to match patterns. There’s no universal way for LLMs to convert words and sentences to tokens. There are many tokenizers. However, one generalization is still close to the truth: around 750 words roughly equal 1000 tokens.

There are also many visual explanations for tokenized prompts. For example, you can try OpenAI’s tokenizer here: https://platform.openai.com/tokenizer. You’ll get slightly different results based on the model you select.

So, an LLM first splits your instructions into chunks we call tokens. And then (this is oversimplification, again) it converts each of these tokens to a series of numbers (array of integers). These arrays of integers are often called vectors. You can also think about them as dots in a multidimensional space. But best of all, these dots are not randomly put in the space; they are close to other dots with a similar meaning. For example, a vector representation of the word “potato” is close to a “tomato” but not close to a “business conference.” And that’s one of the most important sources of the LLMs’ magic.

Let’s imagine that each token converts to a simple array of two numbers (two-dimensional space) to simplify the visual representation.

Anatomy of a prompt

Remember when I said that prompts are just instructions? I lied. Instructions are the most useful subset of prompts, but in general, any text (or just a single letter or character) can be a prompt. If you type a single character, such as “$,” that’s a valid prompt. Just remember that the quality of a reply depends on the quality of your prompt.

However, prompts are not just written words. With multimodal LLMs (a fancy name for LLMs that understand different types of input), you can use your voice or upload a document, an image, or even a song. You can go further and combine these inputs. These are all valid prompts. I often upload a spreadsheet or some other document and provide a few sentences of textual instructions.

So, asking, “Who is faster: Godzilla or T-Rex?” is a valid prompt. Let me save you some tokens: the answer is T-Rex.

Asking an LLM to “Write a 500-word article about the bad influence of Amazon’s RTO policy on Lambda cold starts” is also a valid prompt. It took ChatGPT 13 seconds to figure this one out. I was afraid to ask Claude. It’s hosted on AWS, and I don’t want it to be part of the next round of layoffs.

So, almost anything could be a prompt. However, not all prompts are useful. Luckily, LLMs are products, and like with many other products, LLM users (we) did many crazy things (“lizards,” as my friend would call us) and came up with some helpful tricks. Products evolve with user needs and feedback. LLMs are not different.

One of the early tricks was telling an LLM to pretend to be an expert in the field related to the prompt. This worked incredibly well until you hit the context limit (the number of tokens or characters an LLM can keep in “memory”). Once you reach the context limit, the conversation often takes a weird direction. Imagine talking to Dory Fish on LSD.

People behind LLMs figured out that some parts of our instructions are more important than others and that we often repeat that part across multiple LLM conversations. And system prompts were born. A system prompt is a repeatable part of the prompt with higher priority than the rest of your conversation, which always remains in the context (oversimplification, again).

Repeatability and more control are often essential when you work with LLMs. The system prompts help, but we (software developers, at least) already know how to talk to computers to get repeatable and controlled responses. We can get amazing results by combining code with LLM interaction through API.

How do we get these “amazing results?” Let’s see the 5 habits of highly effective prompters! Oops, I forgot this is not Linkedin. I mean 5 simple tricks for better prompting results.

Five simple tricks for better prompting results

This article is already too long, so there’s no need for a cliffhanger. Here are the tricks I want to tell you about:

- Hint the beginning of the answer.

- Give examples.

- Think step by step.

- Use tools.

- Ask an LLM to improve the prompt.

Let’s go through them (step by step).

Hint the beginning of the answer

I told you to combine code (JavaScript, Python, or anything else; I don’t judge) and LLM for better responses. But to do so, you need to get a structured response from an LLM.

While there are countless ways to structure a response, we really love JSON. So, we ask an LLM politely to answer with JSON and nothing else. But an LLM is also polite, so the conversation from time to time looks like this:

User: …some super long and smart instructions… Always answer with valid JSON and nothing else.

Assistant: Here’s your JSON:

```json

{

“some”: “valid”,

“JSON”: true,

}

```

Then your JSON parser starts yelling at you, and you do the same to the LLM. Replying, “I said JSON only!!!” in capslock works sometimes.

But here’s one more trick you can try: write the beginning of the assistant’s reply in the API call!

LLM APIs expect a conversation (user -> agent -> user -> agent, and so on). If you finish your API call with the user’s message, LLM will reply with the assistant’s message. However, if you finish your API call with the assistant’s message, LLM will treat it as the beginning of its reply and simply continue.

Below is the code example with AWS Bedrock using AWS Node.js SDK and Bedrock Converse API. Other LLMs support something similar.

import { BedrockRuntimeClient, ConverseCommand } from '@aws-sdk/client-bedrock-runtime'

// Create a Bedrock Runtime client in the AWS Region you want to use.

const client = new BedrockRuntimeClient({ region: "eu-central-1" })

// Set the model ID, i.e., Claude 3.5 Sonnet

const modelId = 'anthropic.claude-3-5-sonnet-20240620-v1:0'

// Start a conversation with the user message.

const userPrompt = 'Return the result of all games of the NBA 2023 finals as a short structured JSON.'

const conversation = [

{

role: 'user',

content: [{ text: userPrompt }],

},

{

role: 'assistant',

content: [{ text: '{"' }], // <---- Assistant's reply starts with `{"`

},

]

// Create a command with the model ID, the message, and a basic configuration.

const command = new ConverseCommand({

modelId,

messages: conversation,

})

try {

// Send the command to the model and wait for the response

const response = await client.send(command)

// Extract, parse and print the response text, but prepand `{ "`

const responseText = '{ "' + response.output.message.content[0].text

const parsedJson = JSON.parse(responseText)

console.log(parsedJson)

} catch (err) {

console.log('ERROR', err)

}

Give examples

We can use a similar approach for one more trick.

You probably use ChatGPT or Claude at least a few times per week. Do you always get a perfect reply after your initial prompt? I don’t. I often ask follow-up questions, point out mistakes, or give a few examples to get the answer I am looking for. I know many other people do the same.

However, we often send just one detailed command via the API and expect LLMs to deliver a perfect response. If someone tries to look smart, they’ll probably refer to this as “zero-shot prompting.” It’s just a name for sending direct commands without examples.

Why does it need the name? It doesn’t. But as you can expect, there’s a fancy name for the opposite, too. If you provide examples and simulate conversation, you are using “few-shot prompting.”

For example, you can ask an LLM to label a review comment with a “positive” or “negative” label and return it in JSON format. You can do that by sending a prompt similar to the following:

Here’s a review comment. Return a JSON with the label “positive” or “negative” for this comment:

“Super helpful, worth it!”

This example is simple, so the “zero-shot prompting” works perfectly fine. But let’s say you want to label neutral comments as “negative.” LLM will not know that. You can improve your prompt or even add a detailed system prompt, which might work well. But you can also do the following:

Label review comments and return a JSON with the label “positive” or “negative.” Here are a few examples:

- “Great product 10/10” =>

{ "label": "positive" }- “Didn’t work very well” =>

{ "label": "negative" }- “Not sure” =>

{ "label": "negative" }Label the following comment based on the examples above:

“Super helpful, worth it!”

This technique will improve your chance to get more precise labeling. It’s like a mini in-prompt training.

But there’s a cleaner way to do this using the same approach we used in the first trick: simulate the conversation by passing multiple user and agent messages.

Let’s see the code example!

// Same as above

import { BedrockRuntimeClient, ConverseCommand } from '@aws-sdk/client-bedrock-runtime'

const client = new BedrockRuntimeClient({ region: "eu-central-1" })

const modelId = 'anthropic.claude-3-5-sonnet-20240620-v1:0'

// Start a conversation with the user message.

const systemPrompt = [

{

text: 'Label review comments and return a JSON with the label "positive" or "negative."',

}

]

const conversation = [

{

role: 'user',

content: [{ text: "Great product 10/10" }], // Rating example

},

{

role: 'assistant',

content: [{ text: '{"label":"positive"}' }], // Label example

},

{

role: 'user',

content: [{ text: "Didn't work very well" }], // Rating example #2

},

{

role: 'assistant',

content: [{ text: '{"label":"negative"}' }], // Label example #2

},

{

role: 'user',

content: [{ text: "Not sure" }], // Rating example #3

},

{

role: 'assistant',

content: [{ text: '{"label":"negative"}' }], // Label example #3

},

{

role: 'user',

content: [{ text: "Super helpful, worth it!" }], // <---- ACTUAL REVIEW

},

// You can even hint the begin of the reply here

]

// Create a command with the model ID, the message, and a basic configuration.

const command = new ConverseCommand({

modelId,

system: systemPrompt,

messages: conversation,

})

try {

// Send the command to the model and wait for the response

const response = await client.send(command)

// Extract, parse and print the response text

const responseText = response.output.message.content[0].text

const parsedJson = JSON.parse(responseText)

console.log(parsedJson)

} catch (err) {

console.log('ERROR', err)

}

Easy, right? Just remember that this approach eats more tokens. And tokens = money.

Think step by step

The previous trick works well with humans, too. If you give examples when you delegate a task, there’s a higher chance you’ll get the task done.

But imagine you could use the following trick in conversation with humans, too! Let me give you an example.

You might have a boss or a client. One of the superpowers of bosses and clients is that they can come up with completely unreasonable ideas they want you to do now. Trust me, I was on both sides of that process.

Imagine you could ask them, “Let’s think step by step. How would that feature help our users?”

In practice, this scenario rarely works because people have complex emotions. Instead of them thinking through the process step by step and explaining every detail, you could end up with a new green LinkedIn badge around your profile photo.

Luckily, LLMs have no emotions. Yet. So, adding “Let’s think step by step” or a similar phrase uses more tokens for the response, but it often improves the response.

It actually works so well that OpenAI based its new O1 models on a similar concept. They said the following in the announcement:

We’ve developed a new series of AI models designed to spend more time thinking before they respond. They can reason through complex tasks and solve harder problems than previous models in science, coding, and math.

As the O1 models already use something similar under the hood, you may not want to try this trick if you use them.

Use tools

LLMs are powerful. We use them for everything. And, as Maslow said a long time ago, “it is tempting, if the only tool you have is a hammer, to treat everything as if it were a nail.”

But LLMs suck at some specific tasks. These tasks depend on the model we use, but most models struggle with their math skills, working with dates, etc. The previous tricks might help with these skill issues in some situations but are not real solutions.

Luckily, all major LLMs now support tools. So, instead of using a hammer for everything, we can bring our tools for specific cases.

But what are tools, and why are they useful? Here’s an example!

I asked Claude: “What’s the weather like in Montreal today?” Even if you don’t have the current weather info, you can bet it’s cold in Montreal in November. But Claude tries to be helpful, and it answers the following:

I need to clarify something: I don’t have access to real-time weather data or current conditions. While I can discuss Montreal’s typical weather patterns and climate, I cannot tell you today’s specific weather conditions. For current weather information in Montreal, I’d recommend:

- Checking a weather website or app

- Looking up Environment Canada’s weather service

- Checking local Montreal news stations’ weather reports

Would you like to know about Montreal’s typical weather patterns for this time of year instead?

Instead of giving up, we can give an LLM, in this case, Claude, the skill to check the current weather conditions in the specified location.

How do we do that?

Let’s see the code example! I’ll use AWS Bedrock Converse API with Node.js and Claude Sonnet 3.5 as a model, but this is just an example. You can do something similar with any popular model.

// Same as above

import { BedrockRuntimeClient, ConverseCommand } from '@aws-sdk/client-bedrock-runtime'

const client = new BedrockRuntimeClient({ region: "eu-central-1" })

const modelId = 'anthropic.claude-3-5-sonnet-20240620-v1:0'

// Start a conversation with the user message.

const conversation = [

{

role: 'user',

content: [{ text: "What's the weather like in Montreal?" }],

},

]

// Define available tools

const toolConfig = {

tools: [{

toolSpec: {

// The name and the description of our tool

name: 'get_weather',

description: 'Get weather conditions for a specific location',

inputSchema: {

// It accepts JSON, I told you we love JSON!

json: {

type: 'object',

properties: {

// And it expects the "location" property (see the type and the description)

// For simplicity, we'll skip the date part. Our tool will always answer with today's weather

location: {

type: 'string',

description: 'The location for which you want the weather conditions. Location can be any city, country, etc.',

},

},

required: [ 'location' ],

},

},

},

}],

]

// Create a command with the model ID, the message, and a basic configuration.

const command = new ConverseCommand({

modelId,

messages: conversation,

toolConfig: toolConfig, // Pass the tool configuration to Bedrock

})

try {

// Send the command to the model and wait for the response

const response = await client.send(command)

// We'll see this part in the next example

} catch (err) {

console.log('ERROR', err)

}

That’s the first part of the magic. Claude now knows it can use a tool. However, it still needs to learn how to answer the question. Let’s add the actual skill.

AWS Bedrock Converse command will reply with a JSON object similar to the following:

{

"output": {

"message": {

"role": "assistant",

"content": [

{

"toolUse": {

"toolUseId": "tooluse_kZJMlvQmRJ6eAyJE5GIl7Q",

"name": "get_weather",

"input": {

"location": "Montreal"

}

}

}

]

}

},

"stopReason": "tool_use"

}

Now that we know that, we can update the code with the following (this is a very simple example):

// Code from above

try {

// Send the command to the model and wait for the response

let response = await client.send(command)

// Check if the stop signal is "tool_use" and if our tool was used

if (response.stopReason === 'tool_use' && response.output.message.content[0].toolUse.name === 'get_weather') {

// Extract location and toolUseId

const location = response.output.message.content[0].toolUse.input.location

const toolUseId = response.output.message.content[0].toolUse.toolUseId

// And call a function that might call some 3rd party API to get the current weather conditions

const weatherConditionsResponse = await getWeatherConditions(location)

// Then invoke the model again with the tool_use message and the reply

const updatedCommand = new ConverseCommand({

modelId,

messages: [

...conversation, // Previous message

response.output.message, // Assistant response

// Tool response

{

role: 'user',

content: [

{

toolResult: {

toolUseId: toolUseId,

content: [

{

// Let's assume that weatherConditionsResponse contains JSON an LLM can understand

json: weatherConditionsResponse,

},

],

},

},

],

},

],

toolConfig: toolConfig, // Pass the tool configuration to Bedrock

})

response = await client.send(updatedCommand)

}

// Continue parsing the code

} catch (err) {

console.log('ERROR', err)

}

And that’s it. You have a tool now! Remember that the tool can be any function in your preferred programming language (even Java, I don’t judge you).

You probably heard people talking about AI agents. Sounds magical, right? But most of the time, agents are just 3 raccoons (pardon, tools) in a trench coat.

Ask an LLM to improve the prompt

Can an LLM write a prompt? Remember, prompts are just commands. Often textual. And LLMs are good with text. So, in theory, yes, it can.

But that’s theory. What about practice?

You should try it! It’s simple. Just paste your initial prompt to ChatGPT or Claude and ask it to review and improve it. Guess what? The better instructions you provide, the better result you may expect.

Both OpenAI and Claude now have their prompt generator tools. These generators are a good start.

But we do not need to reinvent the wheel. Giving commands and instructions evolved with humans. Many people wrote about it. For example, the military has many techniques for giving commands and communicating intent.

One of the useful concepts is the Commander’s Intent Statement, which aims to explain the desired end state. An effective Commander’s Intent statement should contain the following:

- Purpose: Explain why personnel must complete the assignment.

- Task: Explain the objective or goal.

- End state: Explain what the result should look like.

An easy way to improve your current prompt is to paste it in ChatGPT or Claude and ask an LLM to rewrite it to follow the Commander’s Intent Statement. You can tell an LLM to ask you if any important details are missing.

However, the Commander’s Intent Statement is just one example. I recently re-read an excellent book called “Sources of Power: How People Make Decisions” by Gary Klein. The book contains a lot of useful information. While the book does not talk about LLMs, some concepts apply to both human conversations and LLMs. For example, the “Considerations in Communicating intent” section presents a good “framework” to review the quality of the command or intent statement.

Gary mentions that a person could present seven types of information to help the people receiving the request understand what to do. Here’s the list of all seven types:

- The purpose of the task (the higher level goals)

- The objective of the task (an image of the desired outcome)

- The sequence of steps in the plan

- The rationale for the plan

- The key decisions that may have to be made

- Antigoals

- Constraints and other considerations

You do not need to have all seven types of information for an effective prompt. For example, you need a strong sequence of steps only when the task’s purpose and objective are not strong enough. However, the list provides good questions to ask when writing a complex prompt.

Summary

Let’s quickly recap the five tips mentioned above:

- Hint the beginning of an answer, LLM will continue.

- Give examples (or use the few-shot prompting technique if you want to sound smart).

- Ask an LLM to think step-by-step.

- Help an LLM with tools. Remember that tools are just functions.

- Ask an LLM to help you improve the prompt.

And, before you go, I just want to state the obvious: writing effective prompts requires thinking and writing. You’ll need to write a lot. I know! You hate writing. But you wrote thousands of lines of JavaScript or Python last month. That’s also just a bunch of text and instructions.

4175 Words

2024-11-05 11:00